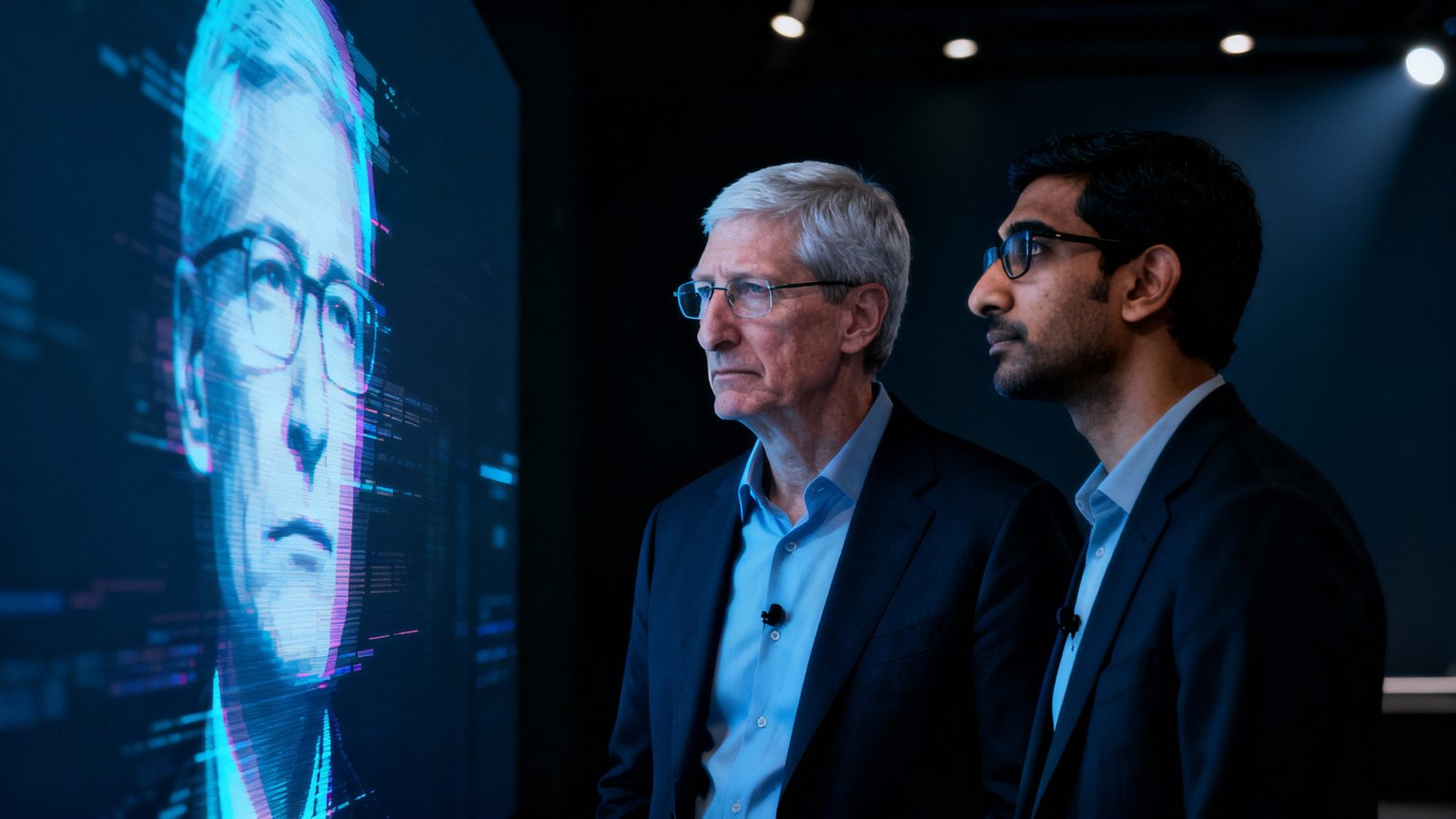

Okay, so let’s just cut to the chase, because honestly, I’m tired of the song and dance. You know that whole deepfake porn mess that exploded on X-formerly-Twitter, the one where people are literally generating non-consensual images, often of women, using AI? Yeah, that one. The thing that’s not just “bad content” but, like, actual digital assault. Here’s the kicker: why are Tim Cook and Sundar Pichai, the big bosses at Apple and Google, suddenly playing coy? Their app stores are the gatekeepers. They have rules. Strict rules. So why is X still sitting pretty on your iPhone and Android?

Where’s the Spine, Guys?

Look, if you’ve been on the internet for more than five minutes, you’ve probably seen the outrage. There’s this Reddit post out there, and it basically calls Cook and Pichai “cowards.” And you know what? It’s hard to argue with that. Because these guys, their companies, they reject and pull apps for way less. Small developers get bounced over things like metadata problems or a crash bug. Poof. Gone. But X, with its deepfake problem, which, let’s be super clear, is a feature that enables serious harm, just… sails on.

I mean, Apple’s App Store Review Guidelines put this under Safety. Section 1.1 covers objectionable content, including overtly sexual or pornographic material, and section 1.2 says apps with user-generated content need to filter objectionable material and act on reports. Paraphrasing the spirit of it: apps shouldn’t be a garbage fire of abuse. Google Play’s developer policies have similar rules on sexual content and user-generated content. They’re all about “safety” and “protecting users.” But when it comes to X, suddenly it’s crickets. It’s like they’ve all gone on a silent retreat, which, by the way, is a pretty convenient time to be unavailable when actual human beings are getting digitally violated on a platform they enable.

The Hypocrisy is a Loud Siren

Honestly, the hypocrisy is just… deafening. These companies, Apple especially, love to puff out their chests about their “walled garden” approach. It’s for your protection, they say. It’s to ensure a quality experience. Then something like this drops, something genuinely vile and damaging, and they shrink. They just shrink. It’s not a good look. It’s not a look at all, actually. It’s an absence of a look, like they’re hoping if they don’t make eye contact, the problem will just go away. Spoiler alert: it won’t.

So, Are They Scared of Elon?

This is the question, isn’t it? Is it about money? X still has a ton of users and still sells subscriptions in the app, and Apple and Google take a cut of those in-app purchases, right? Up to 30%. That’s a lot of cheddar. Is that why? Or is it something else? Is it Elon? The guy’s famously litigious, famously loud, and frankly, kind of unhinged on his own platform. Do Cook and Pichai just not want that fight? Are they thinking, “Nah, we’ll just let the deepfake stuff slide rather than get into a Twitter brawl with the richest guy on Earth”? Because if that’s the case, then who cares about their “values” and “guidelines”? Who cares about the victims?

“It’s not just a content moderation failure; it’s a fundamental breakdown of corporate responsibility when the biggest tech companies enable this kind of abuse for profit or fear.”

The Real Cost of Their Silence

Here’s the meat of it. Their silence, their inaction, it sets a precedent. A really, really bad one. It basically says, “Hey, if you’re big enough, if you’re important enough, you can get away with violating our rules, even if those violations actively harm people.” And what does that mean for the next platform? Or the next AI tool? It’s a green light, isn’t it? It tells every shady developer out there that if they can just get enough users, if they can just make enough noise, Apple and Google will look the other way, even on something as insidious as deepfake sexual imagery.

This isn’t just about X. This is about the entire digital ecosystem. If the two biggest gatekeepers in mobile computing refuse to enforce their own rules against such a clear and present danger, then what are they even there for? Beyond collecting their sweet, sweet percentage, that is. It undermines everything they claim to stand for. It makes their “privacy” and “safety” rhetoric sound like a bad joke.

What This Actually Means

Honestly? It means we’re probably screwed until something forces their hand. I don’t see Tim Cook or Sundar Pichai suddenly growing a backbone and pulling X from their stores voluntarily. Not unless there’s massive, sustained public pressure, maybe some kind of regulatory threat that actually has teeth. Because right now, from where I’m sitting, it looks like they’ve done a very simple cost-benefit analysis:

- Cost of upsetting Elon and losing that 30% cut: Too high.

- Cost of letting deepfake porn proliferate and harming users: Apparently, negligible.

That’s it. That’s the cold, hard calculation. It’s not about what’s right. It’s not about their guidelines. It’s about business, and right now, enabling X’s problematic content seems to be good for their business. And that, my friends, is why we can’t have nice things, or, you know, a safe internet. It’s a pretty depressing thought, isn’t it? What’s it gonna take for these guys to actually step up? I wish I knew. Because it feels like we’re just waiting for the next, even worse, thing to happen before anyone blinks…